The AWS Command Line Interface is a unified tool that provides a consistent interface for interacting with all parts of AWS.

This blog covers frequently used concepts and commands for S3 tasks in the AWS CLI. The CLI is widely used to save time and to customize and automate S3 storage tasks.

Let's dive in.

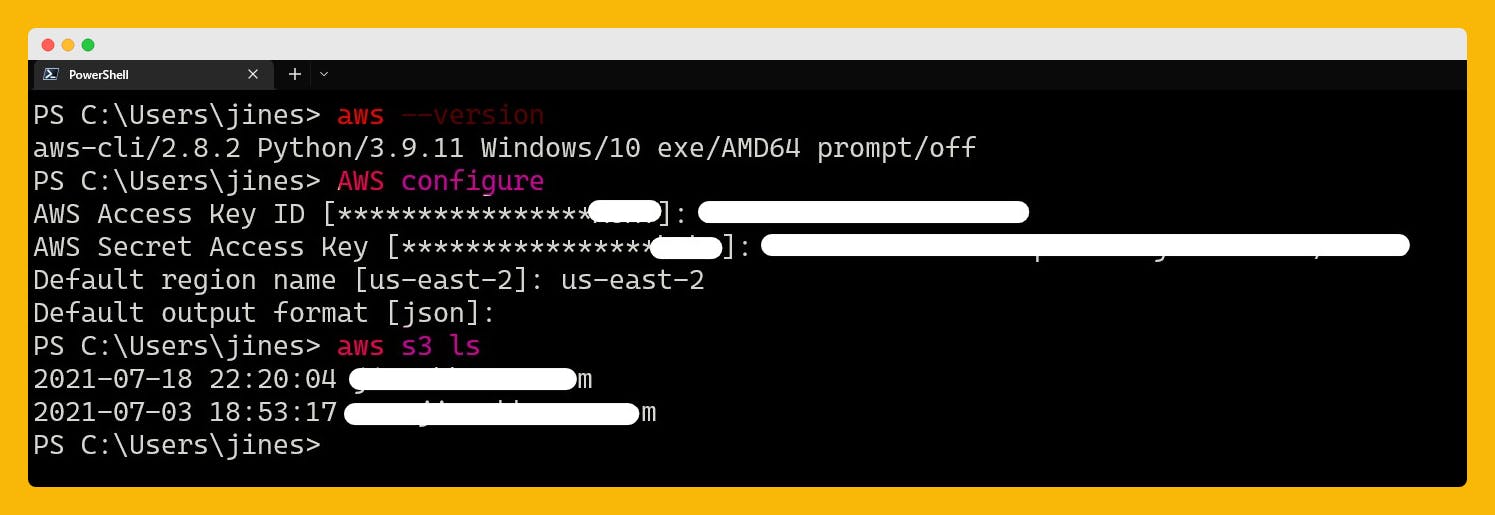

- Install the AWS CLI by following the official installation guide for your OS and processor architecture.

- Now it's time to configure the AWS profile. Run "aws configure".

It prompts for the AWS Access Key ID and AWS Secret Access Key of your admin user (a user with the AdministratorAccess policy), followed by a default region name and output format.

To find your credentials, go to AWS console > IAM > Users > create or choose your admin user > Security credentials tab > Create access key, and securely save the downloaded .csv file. Enter those key values at the "aws configure" prompts to sign in on the AWS CLI.

For example, I am in, verified by the "aws s3 ls" command, which listed the available S3 buckets with their creation date and time.
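Besides "aws s3 ls", the sign-in can also be checked by asking AWS which identity the configured keys belong to. The following is a minimal sketch, assuming a POSIX shell (Linux, macOS, WSL or Git Bash); the function name check_aws_login is made up for illustration, while "aws sts get-caller-identity" is a standard AWS CLI command.

```shell
# Sketch: confirm which identity the configured credentials resolve to.
# Assumes the AWS CLI is installed and "aws configure" has been run.
# check_aws_login is a hypothetical helper name, not part of the AWS CLI.
check_aws_login() {
    # "aws sts get-caller-identity" returns details of the caller and
    # exits with a non-zero status when credentials are missing or invalid.
    if arn=$(aws sts get-caller-identity --query Arn --output text); then
        echo "Signed in as: $arn"
    else
        echo "Credentials not working; re-run: aws configure" >&2
        return 1
    fi
}

# Usage:
#   check_aws_login
```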

Now we will go through the most useful S3 CLI commands, which can save you time and automate S3 storage operations with customization options.

Note: Substitute your own local directory and your own unique S3 bucket names in the examples and screenshots below.

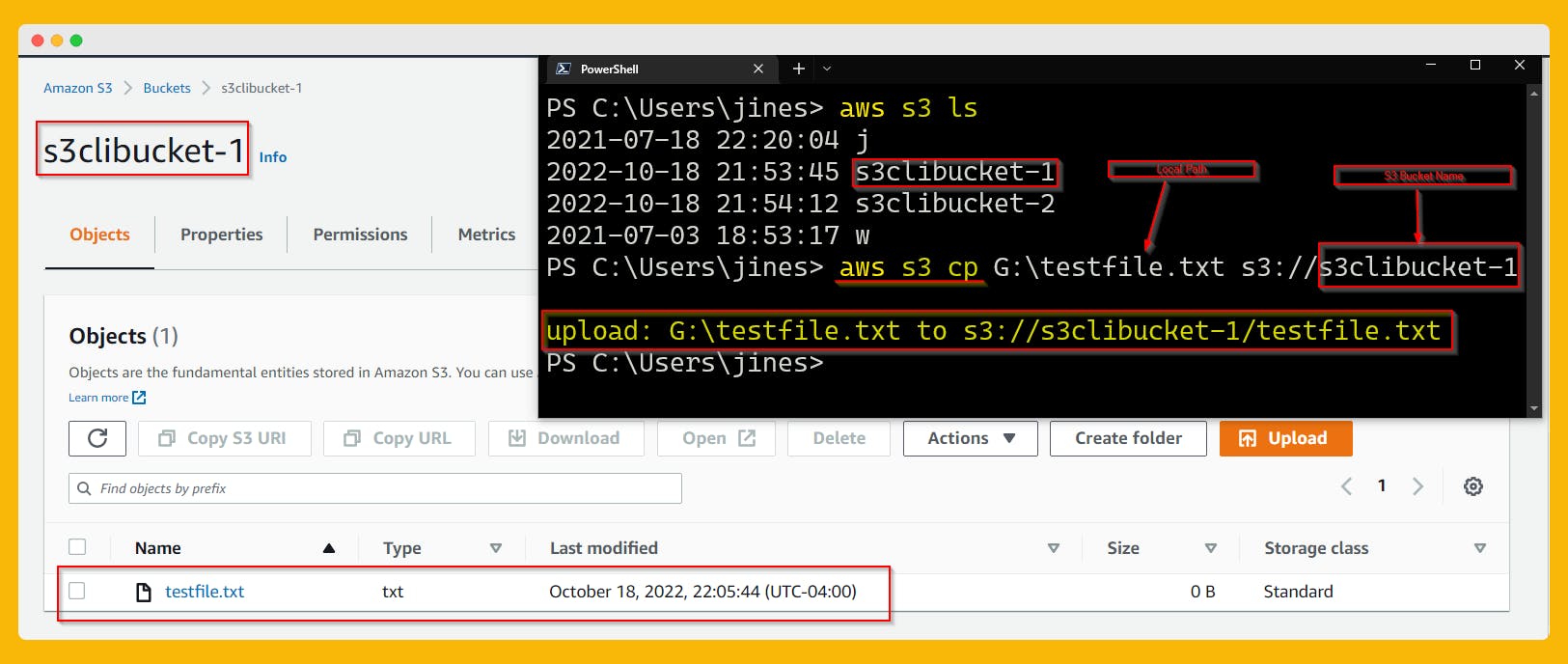

AWS S3 Copy: aws s3 cp

Copies a local file or S3 object to another location, either locally or in S3.

# Copying a local file to S3

aws s3 cp test.txt s3://mybucket/test2.txt

# Copying a file from S3 to S3

aws s3 cp s3://mybucket/test.txt s3://mybucket/test2.txt

# Copying an S3 object to a local file

aws s3 cp s3://mybucket/test.txt G:\test2.txt

# Copying an S3 object from one bucket to another

aws s3 cp s3://mybucket/test.txt s3://mybucket2/

# Setting the Access Control List (ACL) while copying S3 object

aws s3 cp s3://mybucket/test.txt s3://mybucket/test2.txt --acl public-read-write

# Recursively copying

aws s3 cp s3://mybucket D:\testfolder --recursive

aws s3 cp D:\testfolder s3://mybucket/ --recursive

aws s3 cp s3://mybucket/ s3://mybucket2/ --recursive
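A recursive copy can be narrowed with the --exclude and --include filters, and previewed with --dryrun before anything is transferred. Here is a small sketch of that idea, assuming a POSIX shell; copy_txt_files is a hypothetical helper name, and ./testfolder and mybucket are placeholders.

```shell
# Sketch: copy only the .txt files from a local folder to S3.
# copy_txt_files is a hypothetical helper; paths and bucket are placeholders.
copy_txt_files() {
    src=$1      # local directory, e.g. ./testfolder
    dest=$2     # S3 destination, e.g. s3://mybucket/
    shift 2     # any remaining arguments (such as --dryrun) are passed through

    # Filters are applied in order: exclude everything, then re-include *.txt.
    aws s3 cp "$src" "$dest" --recursive --exclude "*" --include "*.txt" "$@"
}

# Usage:
#   copy_txt_files ./testfolder s3://mybucket/ --dryrun   # only prints the plan
#   copy_txt_files ./testfolder s3://mybucket/            # performs the copy
```

Running it once with --dryrun is a cheap way to confirm that the filters match the files you expect.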

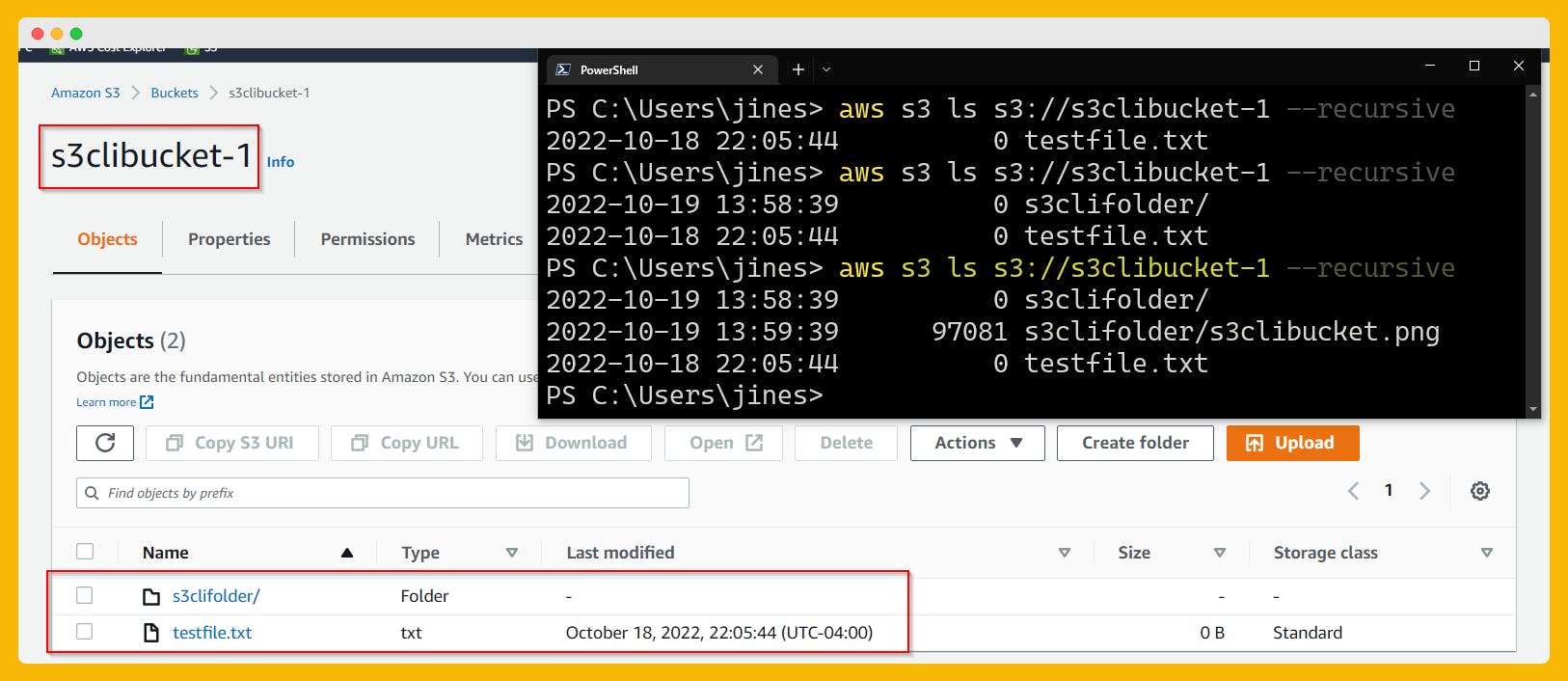

AWS S3 List: aws s3 ls

Lists S3 objects and common prefixes under a prefix, or all S3 buckets.

# Lists all of the buckets owned by the user

aws s3 ls

# Recursively list objects in a bucket

aws s3 ls s3://mybucket --recursive

# Recursively list objects in a bucket with the human-readable and summarize options

aws s3 ls s3://mybucket --recursive --human-readable --summarize
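The summary printed by --summarize can also be used in scripts. The sketch below pulls out just the object count, assuming a POSIX shell with awk available and assuming the summary line has the form "Total Objects: N"; count_objects is a hypothetical helper name.

```shell
# Sketch: print only the number of objects under a bucket or prefix.
# count_objects is a hypothetical helper; it assumes the --summarize
# output contains a line of the form "Total Objects: N".
count_objects() {
    aws s3 ls "$1" --recursive --summarize |
        awk -F': ' '/^Total Objects:/ {print $2}'
}

# Usage:
#   count_objects s3://mybucket
```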

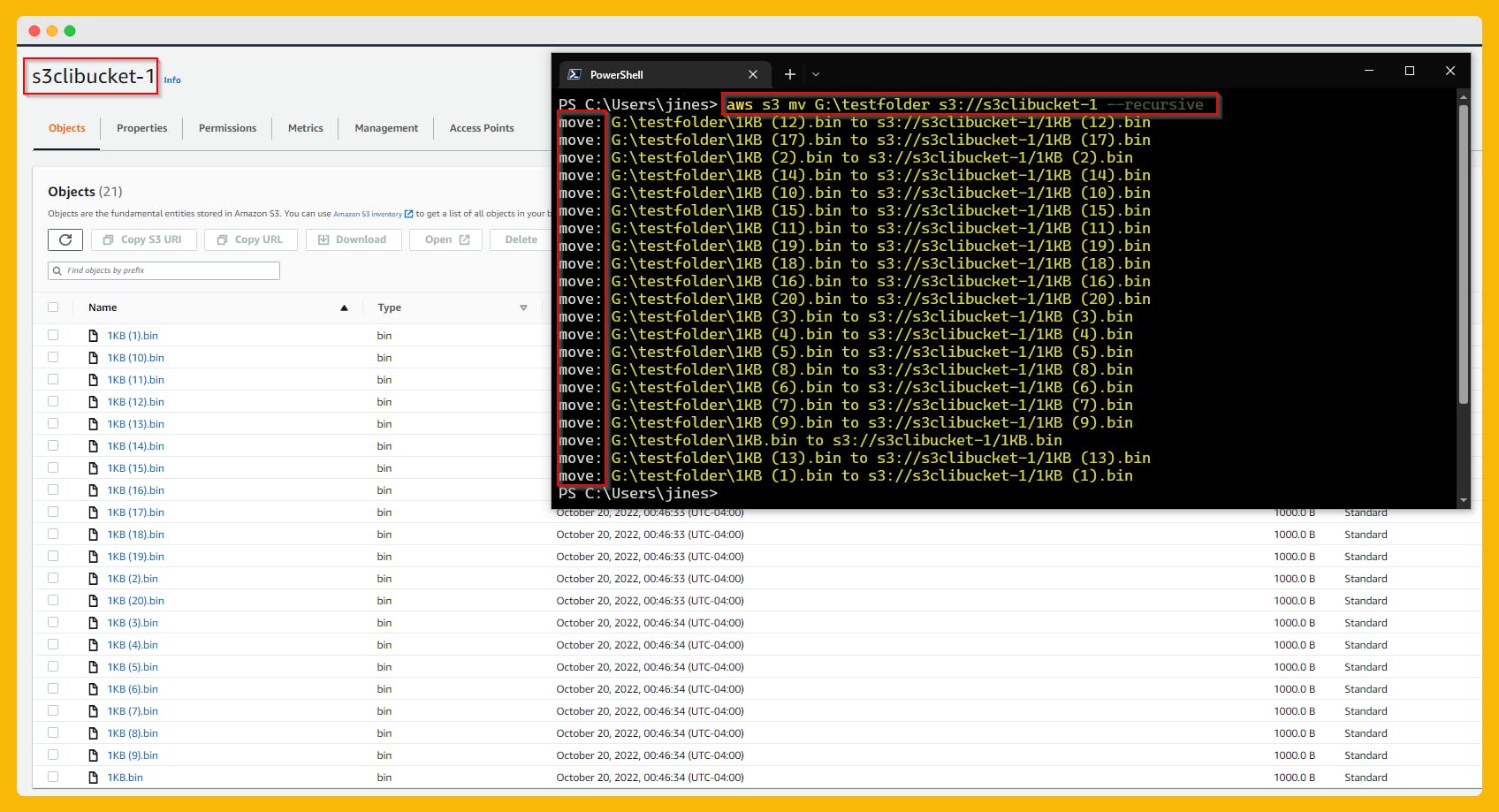

AWS S3 Move: aws s3 mv

Moves a local file or S3 object to another location, either locally or in S3.

# Move a single local file to a specified bucket, optionally renaming it at the destination

aws s3 mv G:\test.txt s3://mybucket/test2.txt

# Move all files from a local folder to an S3 bucket

aws s3 mv G:\testfolder s3://mybucket/ --recursive

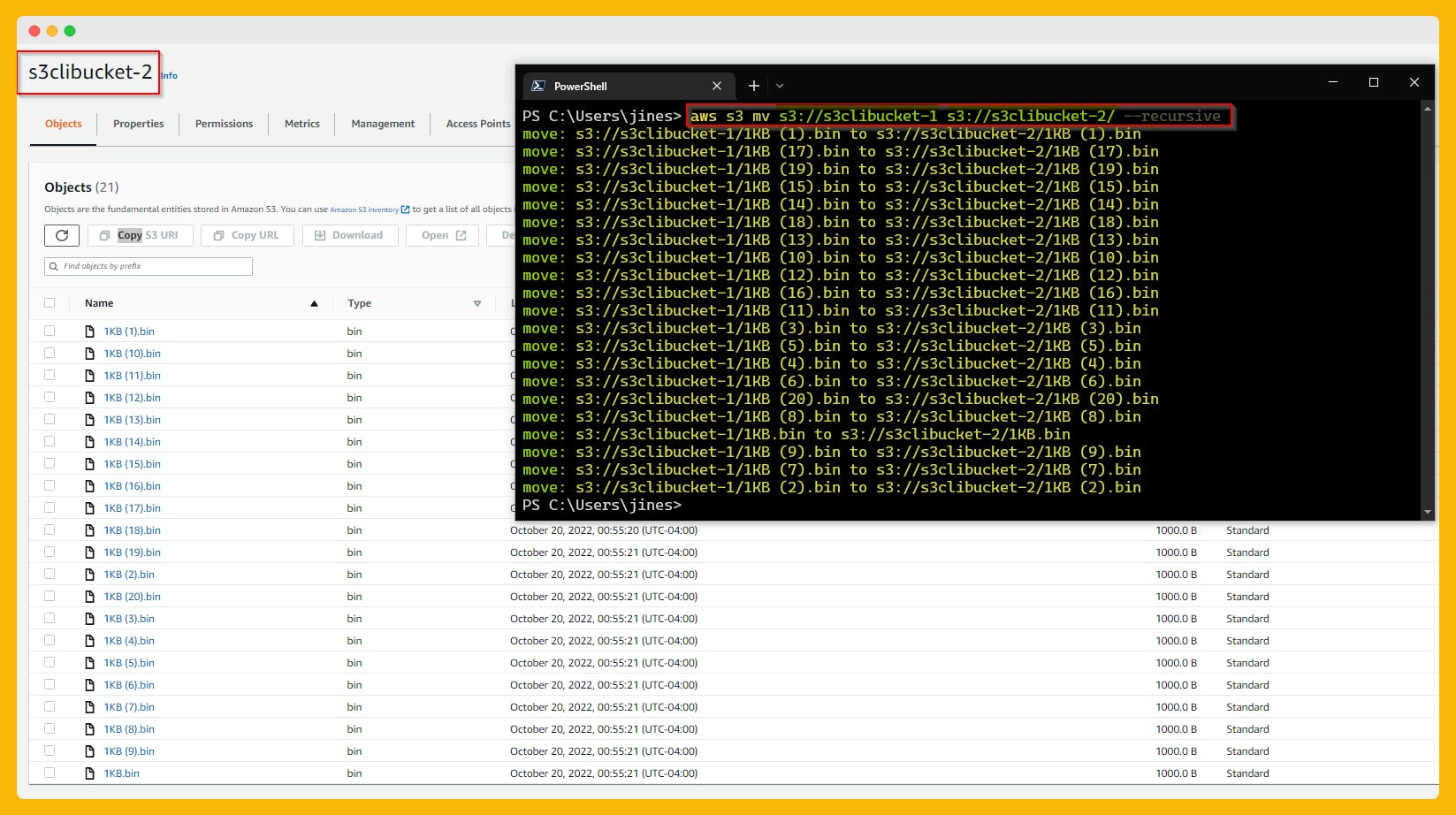

# Move all Objects From one S3 Bucket to another S3 Bucket

aws s3 mv s3://s3clibucket-1 s3://s3clibucket-2 --recursive

# Move all files except those with a specific extension

aws s3 mv G:\testfolder s3://mybucket/ --recursive --exclude "*.jpg"

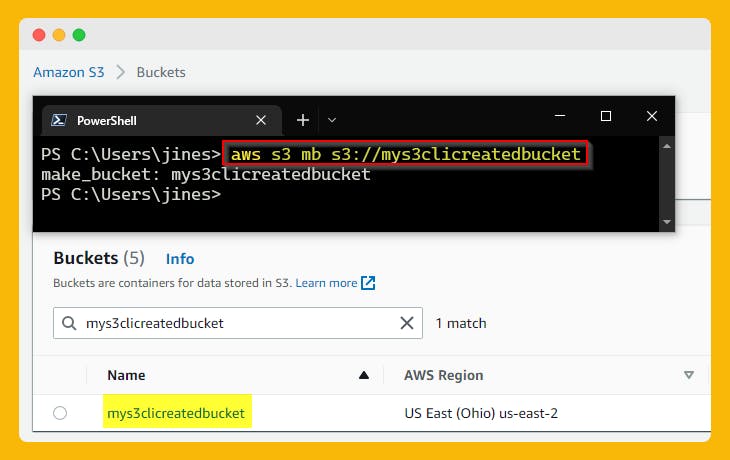

Create an S3 bucket: aws s3 mb

The mb (make bucket) command creates a bucket.

# Create a bucket in the default region (the name must be globally unique)

aws s3 mb s3://myuniquenamebucket

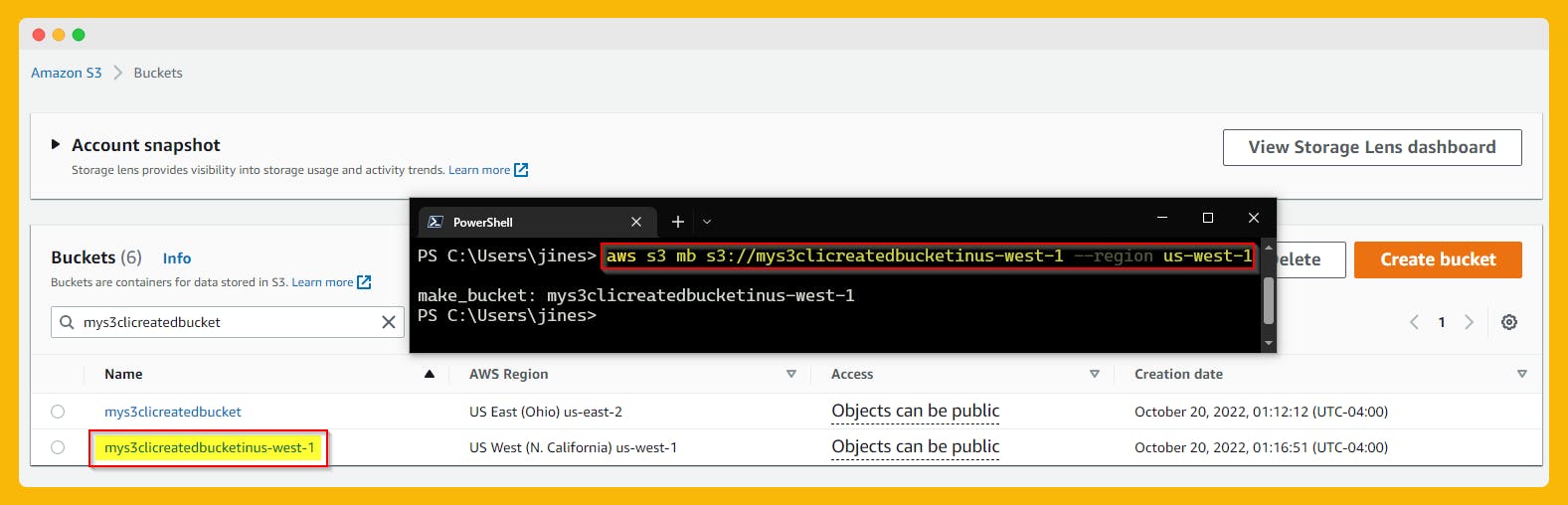

# Create an S3 bucket in a specific region

aws s3 mb s3://mybucket --region us-west-1

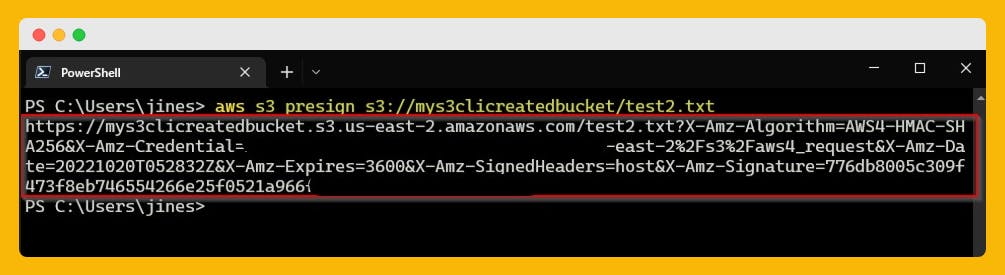

Generate a pre-signed URL for an S3 object: aws s3 presign

# Generate a pre-signed URL (expires after 3600 seconds by default)

aws s3 presign s3://s3clibucket-1/test1.txt

aws s3 presign s3://s3clibucket-2/test2.txt --expires-in 60
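Since --expires-in takes seconds, a tiny wrapper that accepts minutes can make sharing links less error-prone. This is a sketch assuming a POSIX shell; presign_for_minutes is a hypothetical helper name, and the 604800-second cap reflects the 7-day maximum lifetime of a pre-signed URL.

```shell
# Sketch: create a pre-signed URL that is valid for a number of minutes.
# presign_for_minutes is a hypothetical helper, not an AWS CLI command.
presign_for_minutes() {
    s3_uri=$1                 # e.g. s3://s3clibucket-1/test1.txt
    minutes=$2                # lifetime of the link in minutes
    seconds=$((minutes * 60)) # --expires-in expects seconds

    # Pre-signed URLs can be valid for at most 7 days (604800 seconds).
    if [ "$seconds" -gt 604800 ]; then
        echo "Expiry too long: the maximum is 7 days (604800 seconds)" >&2
        return 1
    fi
    aws s3 presign "$s3_uri" --expires-in "$seconds"
}

# Usage:
#   url=$(presign_for_minutes s3://s3clibucket-1/test1.txt 5)
#   curl -o test1.txt "$url"   # anyone holding the URL can download until it expires
```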

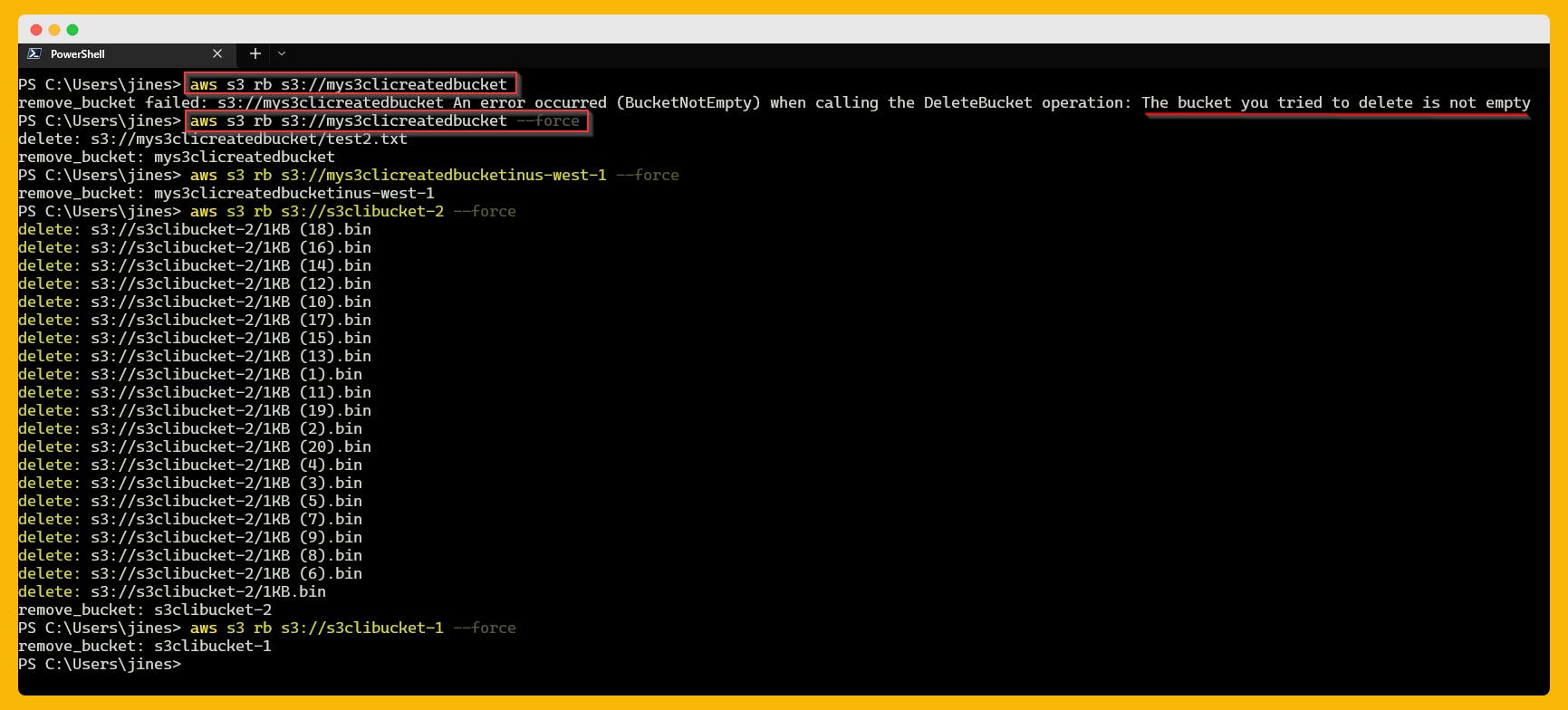

To delete an S3 bucket: aws s3 rb

# Remove an empty bucket

aws s3 rb s3://mybucket

# Forcefully remove all the objects in the bucket and then remove the bucket itself

aws s3 rb s3://mybucket --force

To delete an S3 object: aws s3 rm

# Delete an object from S3 Bucket

aws s3 rm s3://mybucket/test2.txt

# Recursively deletes all objects under a specified bucket

aws s3 rm s3://mybucket --recursive

# Delete all objects except those matching an exclude pattern

aws s3 rm s3://mybucket/ --recursive --exclude "*.jpg"

aws s3 rm s3://mybucket/ --recursive --exclude "another/*"
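Recursive deletes are hard to undo, so it can help to preview them first. The sketch below runs the delete with --dryrun, asks for confirmation, and only then deletes for real. It assumes a POSIX shell; delete_with_preview is a hypothetical helper name.

```shell
# Sketch: preview a recursive delete, then ask before running it.
# delete_with_preview is a hypothetical helper, not an AWS CLI command.
delete_with_preview() {
    target=$1   # e.g. s3://mybucket/
    shift       # remaining arguments (such as --exclude "*.jpg") pass through

    # Step 1: show what would be deleted without deleting anything.
    aws s3 rm "$target" --recursive --dryrun "$@"

    # Step 2: ask for explicit confirmation (prompt goes to stderr).
    printf 'Delete the objects listed above? Type "yes" to continue: ' >&2
    read -r answer

    # Step 3: run the real delete only after a literal "yes".
    if [ "$answer" = "yes" ]; then
        aws s3 rm "$target" --recursive "$@"
    else
        echo "Nothing deleted."
    fi
}

# Usage:
#   delete_with_preview s3://mybucket/ --exclude "*.jpg"
```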

S3 static website configuration for a bucket: aws s3 website

# Configure a bucket as a static website

aws s3 website s3://my-bucket/ --index-document index.html --error-document error.html
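Setting the website configuration is usually one step of several: the pages still have to be uploaded, and the objects must be readable by visitors, which this command does not arrange by itself. Here is a rough sketch of the first steps, assuming a POSIX shell; publish_site and the ./site folder are made-up names, and the public-access setup (for example a bucket policy) is left out.

```shell
# Sketch: configure website hosting, upload the pages, and read back the config.
# publish_site is a hypothetical helper; granting public read access is
# NOT handled here and has to be set up separately.
publish_site() {
    bucket=$1     # bucket name without the s3:// prefix, e.g. my-bucket
    site_dir=$2   # local folder containing index.html and error.html

    # Enable static website hosting with the index and error documents.
    aws s3 website "s3://$bucket/" --index-document index.html --error-document error.html &&
    # Upload the site files to the bucket.
    aws s3 sync "$site_dir" "s3://$bucket/" &&
    # Print the stored website configuration to confirm it was applied.
    aws s3api get-bucket-website --bucket "$bucket"
}

# Usage:
#   publish_site my-bucket ./site
```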

To sync directories and S3 prefixes: aws s3 sync

# Sync a local directory to an S3 bucket

aws s3 sync G:\Testfolder s3://mybucket

# Syncs files between two buckets in different regions

aws s3 sync s3://s3clibucket-1 s3://s3clibucket-2 --source-region us-west-2 --region us-east-1

# Sync objects between two different buckets

aws s3 sync s3://s3clibucket-1 s3://s3clibucket-2

# Sync but exclude objects with a specific extension or directory

aws s3 sync G:\Testfolder s3://s3clibucket-1 --exclude "*.jpg"

aws s3 sync s3://s3clibucket-1/ G:\TestFolder --exclude "*another/*"
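By default, sync only adds or updates files at the destination; files that no longer exist at the source stay where they are. Adding --delete turns the sync into a mirror. The sketch below wraps that, assuming a POSIX shell; mirror_to_bucket is a hypothetical helper name, and a first run with --dryrun is advisable because --delete removes objects.

```shell
# Sketch: mirror a source to a destination, removing extra destination files.
# mirror_to_bucket is a hypothetical helper, not an AWS CLI command.
mirror_to_bucket() {
    src=$1    # e.g. ./Testfolder
    dest=$2   # e.g. s3://s3clibucket-1
    shift 2   # remaining arguments (filters, --dryrun) pass through

    # --delete removes destination files that do not exist in the source.
    aws s3 sync "$src" "$dest" --delete "$@"
}

# Usage:
#   mirror_to_bucket ./Testfolder s3://s3clibucket-1 --exclude "*.jpg" --dryrun
#   mirror_to_bucket ./Testfolder s3://s3clibucket-1 --exclude "*.jpg"
```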

I hope this blog clears up the starter concepts of the AWS S3 CLI commands and encourages you to try them out yourself.

Thank you for reading and/or following along with the blog.

Happy Learning.

Like and Follow for more Azure and AWS Content.

Regards,

Jineshkumar Patel